Stanford Practical Machine Learning - Model Evaluation


This chapter covers model evaluation!

Model Metrics

- Loss measures how well the model predicts the outcome in supervised learning

- Other metrics to evaluate model performance

  - Model specific: e.g. accuracy for classification, mAP for object detection

  - Business specific: e.g. revenue, inference latency

- We select models by multiple metrics

  - Just like how you choose cars

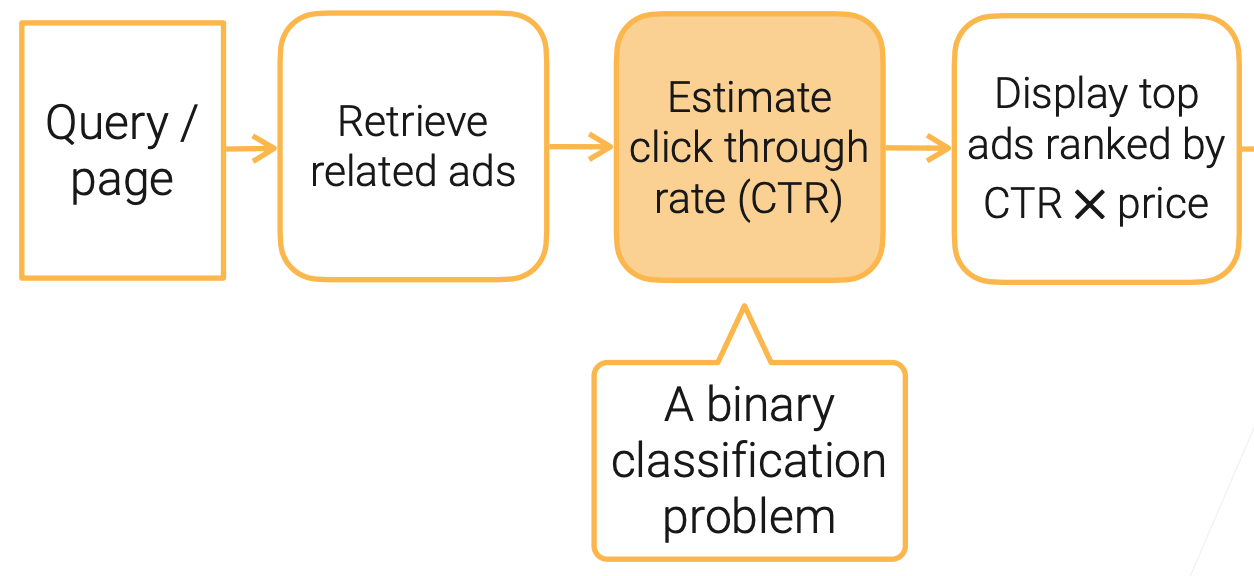

Case Study: Displaying Ads

- Ads are a major revenue source for Internet companies

On to the metrics!

Metrics for Binary Classification

Suggested reading: accuracy, precision, recall, etc.

- Accuracy: # correct predictions / # examples

Accuracy is the fraction of all predictions in which the predicted class is correct.

`sum(y_hat == y) / y.size`

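- Precision: # true positives / # (true positives + false positives)

Precision is computed with respect to one specific class (here the positive class): of the examples predicted to be in that class, it is the fraction that actually are.

`sum((y_hat == 1) & (y == 1)) / sum(y_hat == 1)`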

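- Recall: # true positives / # positive examples

Recall is also computed with respect to one specific class: of all examples that actually belong to that class, it is the fraction the model predicted correctly.

`sum((y_hat == 1) & (y == 1)) / sum(y == 1)`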

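- Be careful of division by zero: precision is undefined when the model predicts no positives, and recall is undefined when there are no positive examples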

- One metric that balances precision and recall

  - F1: the harmonic mean of precision $p$ and recall $r$: $F_1 = \frac{2pr}{p + r}$

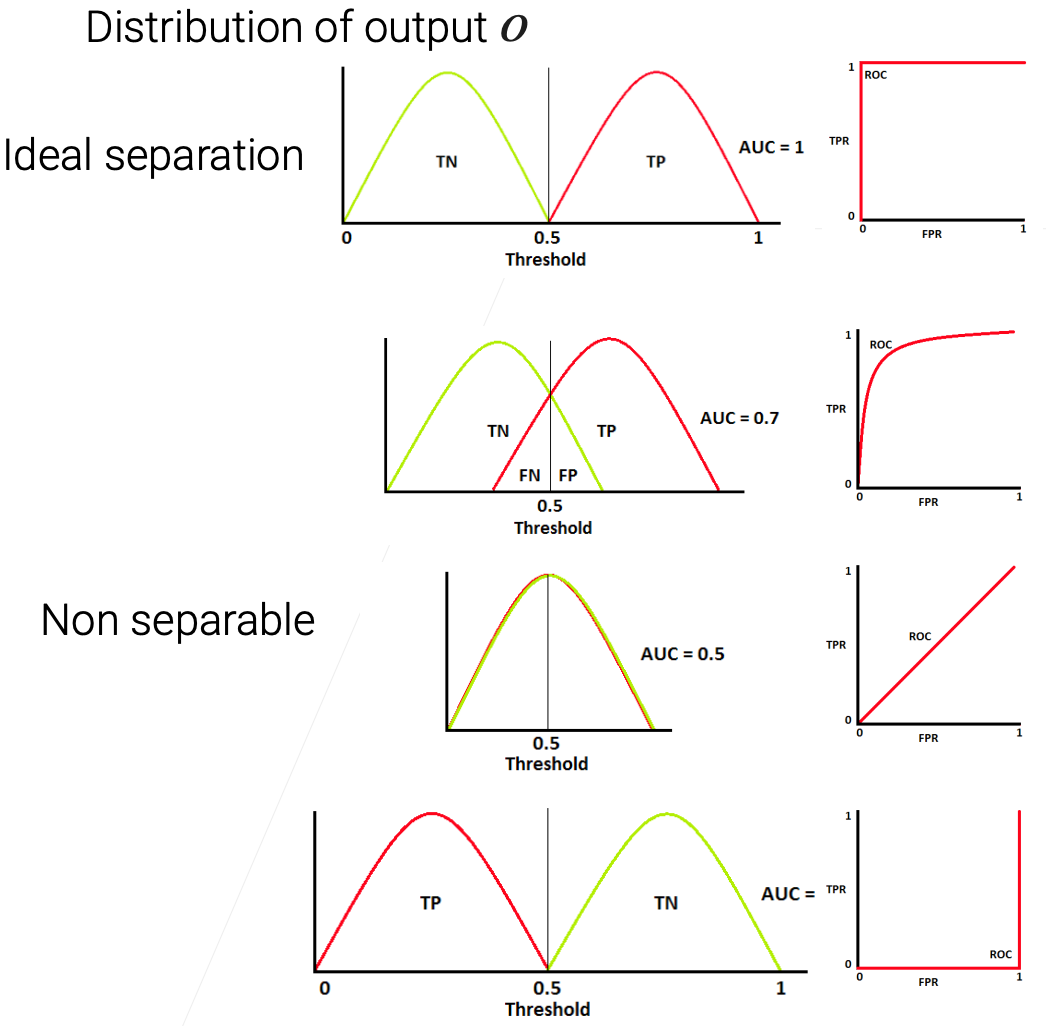

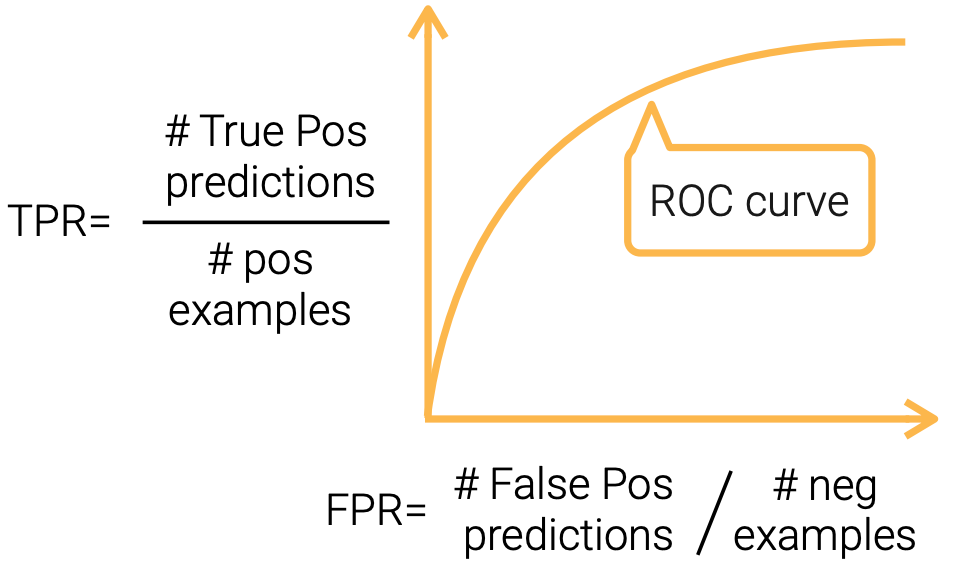
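The formulas above can be sketched in Python with NumPy. This is a minimal illustration, assuming 0/1 labels `y` and 0/1 predictions `y_hat`; the helper names `safe_divide` and `binary_metrics` are hypothetical, and returning 0.0 when a denominator is zero is one common convention for the division-by-zero case, not something the notes prescribe.

```python
import numpy as np


def safe_divide(num, den) -> float:
    # Assumed convention (not from the notes): return 0.0 when the denominator
    # is 0, e.g. precision when the model predicts no positives at all.
    return float(num) / float(den) if den > 0 else 0.0


def binary_metrics(y, y_hat) -> dict:
    """Accuracy, precision, recall and F1 for 0/1 labels y and 0/1 predictions y_hat."""
    y, y_hat = np.asarray(y), np.asarray(y_hat)
    tp = np.sum((y_hat == 1) & (y == 1))                # true positives
    accuracy = safe_divide(np.sum(y_hat == y), y.size)  # correct / all examples
    precision = safe_divide(tp, np.sum(y_hat == 1))     # TP / (TP + FP)
    recall = safe_divide(tp, np.sum(y == 1))            # TP / (TP + FN)
    f1 = safe_divide(2 * precision * recall, precision + recall)  # harmonic mean
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y = np.array([1, 1, 1, 0, 0, 0, 0, 0])
y_hat = np.array([1, 1, 0, 1, 1, 0, 0, 0])
print({k: round(v, 3) for k, v in binary_metrics(y, y_hat).items()})
# {'accuracy': 0.625, 'precision': 0.5, 'recall': 0.667, 'f1': 0.571}
```

With two true positives, four predicted positives and three actual positives, precision is 2/4 and recall is 2/3, so F1 lands between them but closer to the smaller value, as a harmonic mean should.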

AUC-ROC

Suggested reading: the ROC curve and the AUC value.

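- Measures how well the model can separate the two classes

- Choose a decision threshold $\theta$; predict positive if the model output $o > \theta$, else negative. Sweeping $\theta$ traces the ROC curve (true positive rate vs. false positive rate), and AUC is the area under that curve

- In the range [0.5, 1] for any model at least as good as random guessing: 0.5 means random, 1 means perfect separation (a model scoring below 0.5 would do better by flipping its predictions)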
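A small sketch of both ideas, assuming `y` holds 0/1 labels and `o` holds real-valued model scores; the function names are hypothetical. `auc` uses the pairwise definition (the fraction of positive/negative pairs the scores rank correctly, ties counting half), which equals the area under the ROC curve. It is quadratic in the data size, so it only suits small examples.

```python
import numpy as np


def predict_with_threshold(o, theta: float) -> np.ndarray:
    # Predict positive (1) if the score o > theta, else negative (0).
    return (np.asarray(o, dtype=float) > theta).astype(int)


def auc(y, o) -> float:
    """Probability that a random positive scores higher than a random negative.

    Ties count as one half. This pairwise count equals the area under the ROC
    curve obtained by sweeping theta over all scores. It is O(#pos * #neg):
    a sketch for small arrays, not an efficient implementation.
    """
    y, o = np.asarray(y), np.asarray(o, dtype=float)
    pos, neg = o[y == 1], o[y == 0]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative example")
    diff = pos[:, None] - neg[None, :]  # score gap for every (positive, negative) pair
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


y = [1, 0, 1, 1, 0, 0]
o = [0.9, 0.3, 0.4, 0.8, 0.5, 0.1]
print(predict_with_threshold(o, theta=0.5).tolist())  # [1, 0, 0, 1, 0, 0]
print(round(auc(y, o), 3))  # 0.889
```

Changing `theta` changes the predicted labels, but not the AUC, which summarizes all thresholds at once. Here 8 of the 9 positive/negative pairs are ordered correctly; only the positive scored 0.4 falls below the negative scored 0.5.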

Business Metrics for Displaying Ads

- Optimize both revenue and customer experience

- Latency: ads should be shown to users at the same time as the rest of the page content

- ASN: average number of ads shown on a page

- CTR: actual user click-through rate

- ACP: average price the advertiser pays per click

- $\text{revenue} = \#\text{pageviews} \times \text{ASN} \times \text{CTR} \times \text{ACP}$

- Who each metric matters to:

  - revenue, pageviews -> platform company

  - ASN, CTR -> users

  - CTR, ACP -> advertisers
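Because revenue is a product of four terms, a relative change in any one of them changes revenue by the same relative amount. A minimal sketch; the `revenue` function and all traffic numbers are hypothetical, chosen only to make the arithmetic easy to follow:

```python
def revenue(pageviews: float, asn: float, ctr: float, acp: float) -> float:
    ads_shown = pageviews * asn  # ASN: average number of ads per page
    clicks = ads_shown * ctr     # CTR: fraction of shown ads that get clicked
    return clicks * acp          # ACP: average price the advertiser pays per click


# Hypothetical numbers, for illustration only.
base = revenue(pageviews=1_000_000, asn=3.0, ctr=0.02, acp=0.5)
worse_ctr = revenue(pageviews=1_000_000, asn=3.0, ctr=0.018, acp=0.5)
print(round(base, 2), round(worse_ctr, 2))  # 30000.0 27000.0
```

A 10% drop in CTR (0.02 to 0.018), with everything else fixed, cuts revenue by 10%.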

Displaying Ads: Model Business Metrics

- The key model metric is AUC

- A new model with higher AUC may still harm business metrics. Possible reasons:

  - Lower estimated CTR -> fewer ads displayed

  - Lower real CTR, because the model was trained and evaluated on past data, which may not match current user behavior

  - Lower prices

- Online experiment: deploy models to evaluate on real traffic data
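The first reason can be reproduced with a toy example. AUC depends only on how a model ranks ads, not on the absolute CTR values it estimates, so a model can rank better while predicting lower CTRs. The sketch below assumes a hypothetical display rule (show an ad only if its estimated CTR exceeds 0.02); the click labels, estimated CTRs, and function names are all made up for illustration.

```python
import numpy as np


def auc(y, o) -> float:
    # Pairwise AUC: fraction of (positive, negative) pairs ranked correctly; ties count half.
    y, o = np.asarray(y), np.asarray(o, dtype=float)
    diff = o[y == 1][:, None] - o[y == 0][None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def num_ads_shown(est_ctr, min_ctr: float) -> int:
    # Hypothetical display rule: show an ad only if its estimated CTR exceeds min_ctr.
    return int(np.sum(np.asarray(est_ctr, dtype=float) > min_ctr))


# Hypothetical data: whether each of six candidate ads was clicked,
# and the CTR each model estimated for it.
clicked = np.array([1, 0, 1, 0, 0, 1])
old_model = np.array([0.06, 0.05, 0.04, 0.03, 0.01, 0.02])
new_model = np.array([0.025, 0.01, 0.02, 0.008, 0.005, 0.018])

for name, est in [("old", old_model), ("new", new_model)]:
    print(f"{name}: AUC={auc(clicked, est):.3f}, ads shown={num_ads_shown(est, 0.02)}")
# old: AUC=0.667, ads shown=4
# new: AUC=1.000, ads shown=1
```

The new model ranks every clicked ad above every unclicked one, yet only one ad clears the display threshold instead of four. Offline AUC cannot reveal this kind of gap, which is why the model still needs to be evaluated on real traffic.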

Summary

- We evaluate models with multiple metrics

- Model metrics evaluate model performance on examples

  - E.g. accuracy, precision, recall, F1, AUC for classification models

- Business metrics measure how models impact the product

References

Stanford Practical Machine Learning - Model Evaluation

https://alexanderliu-creator.github.io/2023/08/25/stanford-pratical-machine-learning-mo-xing-ping-gu/