Stanford Practical Machine Learning-Bagging


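This chapter introduces Bagging and related ideas. In essence, Bagging trains multiple models and then combines their decisions by voting or averaging. The main goal is to reduce variance by aggregating the outputs of several models, turning an unstable model into a stable one.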

Bagging - Bootstrap AGGregatING

These models are trained independently, so they can be trained in parallel!

- Learn n base learners in parallel, combine to reduce model variance

- Each base learner is trained on a bootstrap sample

- Given a dataset of m examples, create a sample by randomly sampling m examples with replacement

- Around $1 - 1/e \approx 63\%$ of the examples appear in each bootstrap sample; use the remaining out-of-bag examples for validation

- Combine learners by averaging the outputs (regression) or majority voting (classification)

- Random forest: bagging with decision trees

  - Usually selects a random subset of features for each bootstrap sample
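The bootstrap step and the $1 - 1/e$ figure can be checked numerically. An example is missed by all $m$ draws with probability $(1 - 1/m)^m \to 1/e$, so about 63% of examples land in the sample and the other ~37% are out-of-bag. A minimal sketch assuming NumPy; `bootstrap_indices` is a hypothetical helper, not a library function.

```python
import math

import numpy as np


def bootstrap_indices(m, rng):
    """Draw one bootstrap sample of size m; return (in-bag, out-of-bag) indices.

    Hypothetical helper for illustration only.
    """
    in_bag = rng.integers(0, m, size=m)  # m draws with replacement
    picked = np.zeros(m, dtype=bool)
    picked[in_bag] = True  # examples drawn at least once
    out_of_bag = np.flatnonzero(~picked)  # never drawn: usable for validation
    return in_bag, out_of_bag


rng = np.random.default_rng(0)
m = 100_000
in_bag, oob = bootstrap_indices(m, rng)
unique_frac = len(np.unique(in_bag)) / m
print(f"unique in-bag fraction: {unique_frac:.3f} (theory: {1 - 1 / math.e:.3f})")
print(f"out-of-bag fraction:    {len(oob) / m:.3f}")
```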

Bagging Code (scikit-learn)

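A minimal from-scratch sketch of bagging for regression, assuming NumPy arrays and scikit-learn estimators. Only `sklearn.base.clone` and `DecisionTreeRegressor` come from the library; the `Bagging` class, its `seed` parameter, and the toy sine data are illustrative.

```python
import numpy as np
from sklearn.base import clone
from sklearn.tree import DecisionTreeRegressor


class Bagging:
    """Illustrative bagging regressor: one clone per bootstrap sample, outputs averaged."""

    def __init__(self, base_learner, n_learners, seed=None):
        # clone() returns an unfitted copy with the same hyperparameters
        self.learners = [clone(base_learner) for _ in range(n_learners)]
        self.rng = np.random.default_rng(seed)

    def fit(self, X, y):
        X, y = np.asarray(X), np.asarray(y)
        m = len(X)
        for learner in self.learners:
            # Each learner gets its own bootstrap sample; the fits are independent,
            # so this loop could run in parallel
            idx = self.rng.integers(0, m, size=m)
            learner.fit(X[idx], y[idx])
        return self

    def predict(self, X):
        # Regression: average the base learners (classification would use a majority vote)
        preds = np.stack([learner.predict(X) for learner in self.learners])
        return preds.mean(axis=0)


# Toy data: noisy sine curve (illustrative only)
rng = np.random.default_rng(42)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(0, 0.3, size=200)

model = Bagging(DecisionTreeRegressor(), n_learners=50, seed=0).fit(X, y)
X_test = np.array([[0.0], [1.5]])
pred = model.predict(X_test)
print(pred)
```

scikit-learn also ships `BaggingRegressor` and `BaggingClassifier` in `sklearn.ensemble`, which implement the same idea and additionally support `n_jobs` for fitting base learners in parallel and `oob_score=True` for out-of-bag validation.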

Random Forest

- A special case of bagging that uses decision trees as the base learners

- Often randomly selects a subset of features for each learner

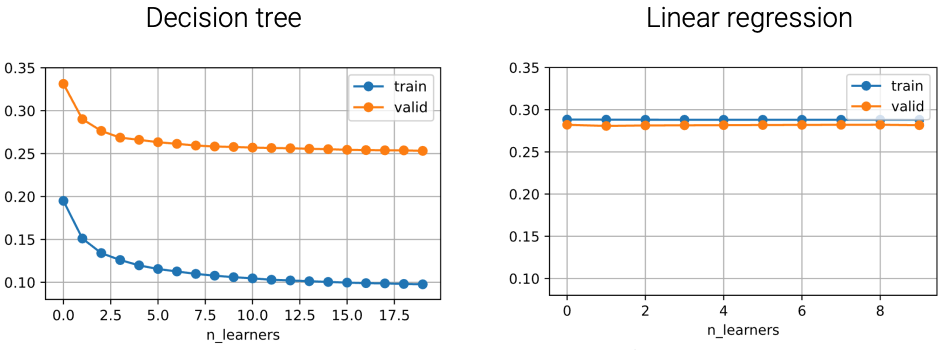
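A minimal sketch of a random forest with scikit-learn's `RandomForestClassifier` on synthetic data; the dataset and hyperparameter values are arbitrary choices for illustration. Two differences from the bullets above are worth knowing: scikit-learn draws the random feature subset at each split (`max_features`) rather than once per tree, and it combines trees by averaging their predicted class probabilities instead of a hard majority vote.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic classification data (illustrative only)
X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=0)

forest = RandomForestClassifier(
    n_estimators=100,     # number of bagged decision trees
    max_features="sqrt",  # random subset of features considered at each split
    bootstrap=True,       # each tree is trained on a bootstrap sample
    oob_score=True,       # validate on the out-of-bag examples
    n_jobs=-1,            # trees are independent, so fit them in parallel
    random_state=0,
)
forest.fit(X, y)
print(f"OOB accuracy: {forest.oob_score_:.3f}")
```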

Applying Bagging to Unstable Learners

Models with high variance are called unstable learners.

- Bagging reduces model variance, especially for unstable learners

- Given the ground truth $f$ and base learners $\hat{f}_D$ trained on datasets $D$, the bagging prediction for regression is $\hat{f}(x) = E_D[\hat{f}_D(x)]$

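- Since $(E[Z])^2 \le E[Z^2]$ for any random variable $Z$ (Jensen's inequality), the right-hand inequality below holds, and because $\hat{f}(x) = E_D[\hat{f}_D(x)]$ it is equivalent to the left-hand one:

$$
(f(x) - \hat{f}(x))^2 \le E_D[(f(x) - \hat{f}_D(x))^2] \iff \hat{f}(x)^2 \le E_D[\hat{f}_D(x)^2]
$$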

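Here $f(x) - \hat{f}(x)$ is the error of the bagged prediction and $f(x) - \hat{f}_D(x)$ is the error of a single base learner, so the squared error of bagging is never larger than the expected squared error of a single learner.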
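Expanding the square makes the equivalence explicit and shows how large the gain is: the cross term becomes $-2 f(x)\hat{f}(x)$ because $E_D[\hat{f}_D(x)] = \hat{f}(x)$, and what remains is the variance of the base learners at $x$.

$$
E_D[(f(x) - \hat{f}_D(x))^2] = f(x)^2 - 2 f(x)\hat{f}(x) + E_D[\hat{f}_D(x)^2] = (f(x) - \hat{f}(x))^2 + \underbrace{E_D[\hat{f}_D(x)^2] - \hat{f}(x)^2}_{\mathrm{Var}_D[\hat{f}_D(x)] \,\ge\, 0}
$$

The more a base learner's prediction varies across training sets $D$, the larger this variance term and the more bagging helps.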

Unstable Learners

- Decision trees are unstable; linear regression is stable
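A rough way to see (in)stability: refit each model on many bootstrap resamples of one dataset and measure how much its predictions move. A sketch assuming scikit-learn; the toy data, the hypothetical `prediction_variance` helper, and all sizes are made up for illustration, and resampling a single dataset is only a proxy for drawing fresh training sets $D$.

```python
import numpy as np
from sklearn.ensemble import BaggingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor


def prediction_variance(make_model, X, y, X_test, n_rounds=50, seed=0):
    """Mean (over X_test) variance of predictions from models fit on bootstrap resamples."""
    rng = np.random.default_rng(seed)
    m = len(X)
    preds = []
    for _ in range(n_rounds):
        idx = rng.integers(0, m, size=m)  # proxy for drawing a new training set D
        preds.append(make_model().fit(X[idx], y[idx]).predict(X_test))
    return np.var(np.stack(preds), axis=0).mean()


# Toy data: linear signal plus Gaussian noise (illustrative only)
rng = np.random.default_rng(1)
X = rng.uniform(-3, 3, size=(200, 1))
y = 0.5 * X[:, 0] + rng.normal(0, 0.5, size=200)
X_test = np.linspace(-2.5, 2.5, 20).reshape(-1, 1)

var_tree = prediction_variance(DecisionTreeRegressor, X, y, X_test)
var_linear = prediction_variance(LinearRegression, X, y, X_test)
var_bagged = prediction_variance(
    lambda: BaggingRegressor(DecisionTreeRegressor(), n_estimators=25, random_state=0),
    X, y, X_test,
)
print(f"single tree:       {var_tree:.4f}")
print(f"bagged trees:      {var_bagged:.4f}")
print(f"linear regression: {var_linear:.4f}")
```

A fully grown tree tracks individual noisy points, so its predictions jump as the sample changes; a linear fit averages over all points and barely moves, which is why bagging buys much more for trees.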

References

Stanford Practical Machine Learning-Bagging

https://alexanderliu-creator.github.io/2023/08/26/stanford-pratical-machine-learning-bagging/