Stanford Practical Machine Learning - Data Transformation

Last updated: 7 months ago

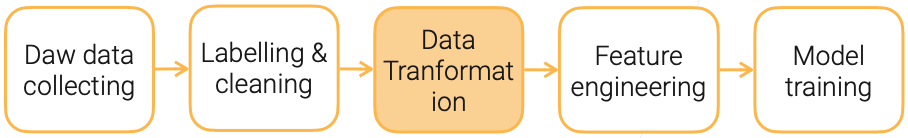

This chapter covers data transformation.

Data Transformation

- ML algorithms prefer well-defined, fixed-length, well-conditioned, nicely distributed input

- Next, data transformation methods for different data types

Normalization for Real Value Columns

Normalization makes training more stable

Min-max normalization: linearly map to a new min a and max b

$$
x'_i = \frac{x_i - \min_x}{\max_x - \min_x}(b - a) + a
$$

Z-score normalization: 0 mean, 1 standard deviation

$$
x'_i = \frac{x_i - \operatorname{mean}(x)}{\operatorname{std}(x)}
$$

Decimal scaling

$$
x'_i = x_i / 10^j, \quad \text{smallest } j \text{ s.t. } \max(|x'|) < 1
$$

Log scaling

$$
x'_i = \log(x_i)
$$
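The four normalizations above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function names are made up for this example, constant columns (zero range or zero standard deviation) are not handled, log scaling assumes strictly positive values, and decimal scaling assumes a non-negative exponent j.

```python
import numpy as np

def min_max(x, a=0.0, b=1.0):
    """Linearly map x so that min(x) -> a and max(x) -> b."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min()) * (b - a) + a

def z_score(x):
    """Shift to zero mean and scale to unit standard deviation."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()

def decimal_scaling(x):
    """Divide by 10^j for the smallest j >= 0 with max(|x'|) < 1."""
    x = np.asarray(x, dtype=float)
    max_abs = np.abs(x).max()
    j = 0
    while max_abs / 10**j >= 1:
        j += 1
    return x / 10**j

def log_scaling(x):
    """Natural log; assumes every value is strictly positive."""
    return np.log(np.asarray(x, dtype=float))

# A heavy-tailed toy column (hypothetical values).
x = np.array([1.0, 10.0, 100.0, 1000.0])
print(min_max(x))          # values in [0, 1]
print(z_score(x))          # mean 0, std 1
print(decimal_scaling(x))  # largest magnitude below 1
print(log_scaling(x))      # evenly spaced for this column
```

On a column like this, min-max and z-score still leave most values bunched near the low end, while log scaling spreads them evenly, which is why it is often preferred for heavy-tailed features.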

Image Transformations

- Our earlier web scraping example would collect ~15 TB of images per year

- 5 million houses sold in the US per year, ~20 images/house, ~153 KB per image, ~1041x732 resolution

- cropping, downsampling, compression

- Save storage cost, faster loading at training

- At ~320x224 resolution, 15 TB -> 1.4TB

- ML is good at low-resolution images

- Be aware of lossy compression

- Medium (80%-90%) JPEG compression may lead to a 1% accuracy drop on ImageNet

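Data quality and data size must be traded off against each other. Data size directly determines storage cost, while data quality directly affects the accuracy of the trained model.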
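The storage figures in this section can be checked with quick arithmetic. The sketch below assumes decimal units (1 TB = 10^9 KB) and, as a rough approximation, that file size scales linearly with pixel count; real JPEG sizes depend on content and quality settings, so treat the result as an estimate.

```python
# Figures taken from the notes above.
houses_per_year = 5_000_000
images_per_house = 20
kb_per_image = 153
full_res = (1041, 732)
small_res = (320, 224)

# Total yearly volume at full resolution, in decimal terabytes.
total_tb = houses_per_year * images_per_house * kb_per_image / 1e9

# Assumption: storage shrinks in proportion to the number of pixels.
pixel_ratio = (small_res[0] * small_res[1]) / (full_res[0] * full_res[1])
small_tb = total_tb * pixel_ratio

print(f"{total_tb:.1f} TB -> {small_tb:.1f} TB")  # prints "15.3 TB -> 1.4 TB"
```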

- Image whitening

- Generalized normalization of vector values

- Pixels in local neighborhood are highly correlated.

- Whitening removes redundancy through linear transformations

- Vector $x$ has mean 0 and covariance estimate $\Sigma$

- $y = Wx$, with $W$ chosen s.t. $W^TW = \Sigma^{-1}$, has identity covariance

- Common choices of whitening matrix: eigen-system of $\Sigma$ (PCA), $\Sigma^{-\frac{1}{2}}$ (ZCA)

- Model converges faster with whitened image input

- Especially for unsupervised learning, e.g. GAN
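As a minimal sketch of the whitening bullets above, the code below builds both the PCA and ZCA whitening matrices from the eigendecomposition of an estimated covariance matrix. The function name, the small `eps` stabilizer, and the synthetic 4-dimensional data are assumptions made for illustration; real image pipelines usually whiten flattened patches or pixels and pick `eps` more carefully.

```python
import numpy as np

def whitening_matrices(X, eps=1e-10):
    """Return (W_pca, W_zca) for data X of shape (n_samples, n_features)."""
    Xc = X - X.mean(axis=0)                    # center: mean 0
    cov = Xc.T @ Xc / Xc.shape[0]              # covariance estimate Sigma
    eigvals, eigvecs = np.linalg.eigh(cov)     # Sigma = U diag(s) U^T
    scale = np.diag(1.0 / np.sqrt(eigvals + eps))
    W_pca = scale @ eigvecs.T                  # rotate, then rescale
    W_zca = eigvecs @ scale @ eigvecs.T        # Sigma^(-1/2), stays close to input axes
    return W_pca, W_zca

# Synthetic correlated features (hypothetical stand-in for neighboring pixels).
rng = np.random.default_rng(0)
mix = np.array([[2.0, 0.5, 0.0, 0.0],
                [0.0, 1.0, 0.3, 0.0],
                [0.0, 0.0, 1.5, 0.2],
                [0.0, 0.0, 0.0, 1.0]])
X = rng.normal(size=(2000, 4)) @ mix

W_pca, W_zca = whitening_matrices(X)
Xc = X - X.mean(axis=0)
Y = Xc @ W_zca.T                               # y = W x for every row
cov_Y = Y.T @ Y / Y.shape[0]
print(np.round(cov_Y, 3))                      # approximately the identity matrix
```

Both matrices satisfy $W^TW \approx \Sigma^{-1}$; they differ only by a rotation, and ZCA is the choice that keeps the whitened data as close as possible to the original pixel layout.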

Video Transformations

- Input variability high

- Average video length: Movies ~2h, YouTube videos ~11min, TikTok short videos ~15sec

- Preprocessing to trade off storage, quality and loading speed

- Tractable ML problems with short video clips (<10sec)

- Ideally each clip is a coherent event (e.g. a human action)

- Semantic segmentation is extremely hard.

- Common practice: decode a playable video clip, sample a sequence of frames, compute spectrograms for audio

- Easy to load into the model, at the cost of increased storage space
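The "sample a sequence of frames" step can be illustrated without any video library by computing which frame indices to keep. The helper below is a hypothetical sketch that samples uniformly across a clip; actual decoding (for example with a video reader) and other strategies such as random or keyframe-based sampling are outside its scope.

```python
import numpy as np

def sample_frame_indices(num_frames, num_samples):
    """Pick num_samples frame indices spread evenly over a clip."""
    if num_samples >= num_frames:
        # Short clip: keep every frame.
        return np.arange(num_frames)
    # Evenly spaced positions from the first to the last frame.
    positions = np.linspace(0, num_frames - 1, num_samples)
    return positions.round().astype(int)

# A 10-second clip at 30 fps (assumed values), reduced to 8 frames.
indices = sample_frame_indices(num_frames=300, num_samples=8)
print(indices)
```

Storing only the sampled frames makes loading cheap, but as the bullets note, decoded frames usually take more space than the compressed clip they came from.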

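Encoding and decoding also involve a trade-off: better compression means smaller storage, but the decoding and processing overhead grows as well.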

Text Transformations

- Stemming and lemmatization: a word -> a common base form

- E.g. am, are, is -> be; car, cars, car's, cars' -> car

- Example: Topic modeling

- Tokenization: text string -> a list of tokens (smallest unit to ML algorithms)

- By word: text.split(' ')

- By char: list(text) in Python (text.split('') works in JavaScript but raises ValueError in Python)

- By subwords:

- e.g. “a new gpu!” -> “a”, “new”, “gp”, “##u”, “!”

- Custom vocabulary learned from the text corpus (Unigram, WordPiece)

Vocabulary construction & tokenization
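The three tokenization granularities above can be sketched as follows. The subword function is a simplified greedy longest-match-first split in the style of WordPiece, using a tiny hand-written vocabulary that is purely hypothetical; in practice the vocabulary is learned from a corpus and the tokenizer comes from a library.

```python
import re

def tokenize_by_word(text):
    """Split on single spaces; punctuation stays attached to words."""
    return text.split(" ")

def tokenize_by_char(text):
    """Every character becomes a token."""
    return list(text)

def tokenize_by_subword(text, vocab, unk="[UNK]"):
    """Greedy longest-match-first split; non-initial pieces carry '##'."""
    tokens = []
    # Pre-tokenize into runs of word characters and single punctuation marks.
    for word in re.findall(r"\w+|[^\w\s]", text):
        start, pieces = 0, []
        while start < len(word):
            end, piece = len(word), None
            while end > start:
                candidate = word[start:end]
                if start > 0:
                    candidate = "##" + candidate
                if candidate in vocab:
                    piece = candidate
                    break
                end -= 1
            if piece is None:
                # No vocabulary entry matches: mark the whole word unknown.
                pieces = [unk]
                break
            pieces.append(piece)
            start = end
        tokens.extend(pieces)
    return tokens

# Toy vocabulary (hypothetical) chosen to reproduce the example above.
toy_vocab = {"a", "new", "gp", "##u", "!"}
print(tokenize_by_word("a new gpu!"))               # ['a', 'new', 'gpu!']
print(tokenize_by_char("gpu"))                      # ['g', 'p', 'u']
print(tokenize_by_subword("a new gpu!", toy_vocab)) # ['a', 'new', 'gp', '##u', '!']
```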

Summary

- Transform data into formats preferred by ML algorithms

- Tabular: normalize real value features

- Images: cropping, downsampling, whitening

- Videos: clipping, sampling frames

- Text: stemming, lemmatization, tokenization

- Need to balance storage, quality, and loading speed
